Microcode optimization...

Found some time to hack on a small SoC for the iCE40 board and came across the light8080 project at OpenCores. This is a rather small (ca. 230 LUTs + BRAM) microcoded implementation of the Intel 8080 CPU in VHDL and Verilog, including a UART and memory interface; the Verilog code synthesizes nicely with Yosys after some small fixes. The 8080 is quite attractive as a soft core for a small FPGA, since it was used in early CP/M systems (so a lot of software is available) as well as in other interesting systems, such as the original DEC VT100 terminal (JS emulator of the VT100 hardware).

While taking a closer look at the microcoded CPU implementation (I haven't tried running the SoC on the FPGA so far), I think I found some potential for optimization. The 8080 XCHG instruction swaps the contents of the 16-bit register pair DE with the contents of the register pair HL.

The original implementation looks like this (file ucode/light8080.m80, l. 193ff.). The author even notes that the original Intel implementation is 6 T cycles faster (the microinstructions shown here take 12 cycles, the remaining four are used by instruction fetch and decoding):

__code "11101011"

__asm XCHG

// 16 T cycles vs. 10 for the original 8080...

T1 = _d ; NOP

NOP ; _x = T1

T1 = _e ; NOP

NOP ; _y = T1

T1 = _h ; NOP

NOP ; _d = T1

T1 = _l ; NOP

NOP ; _e = T1

T1 = _x ; NOP

NOP ; _h = T1

T1 = _y ; NOP

NOP ; _l = T1 ; #end

A small, well-known trick can accelerate this quite a bit: using the XOR swap algorithm, the new microcode should be as fast as the original 8080:

__code "11101011"

__asm XCHG

// swap d<->h; 10 T cycles? using XOR swap algorithm

T1 = _d ; NOP

T2 = _h ; _d = XRL ; #fp_rc, #clr_acy

T1 = _h ; NOP

T2 = _d ; _h = XRL ; #fp_rc, #clr_acy

T1 = _d ; NOP

T2 = _h ; _d = XRL ; #end, #fp_rc, #clr_acy

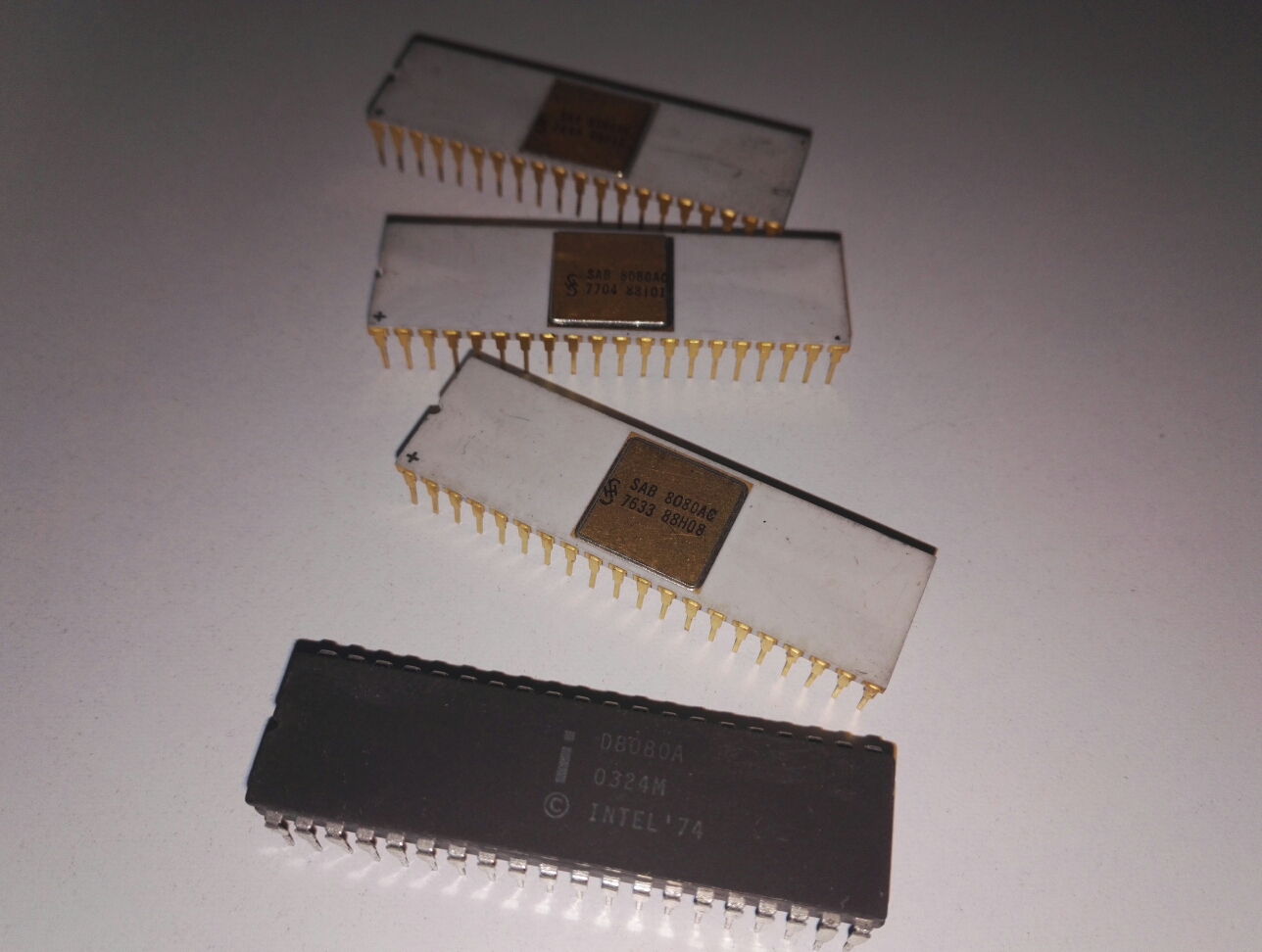

This should only take 10 T cycles (including fetch and decode). It would be interesting to see whether Intel used the same trick in the original 8080. There's an 8080 hidden in one of the drawers in the lab, but considering the effort required to decap and reverse engineer the chip, I think that Ken Shirriff might find out first :-).
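To sanity-check the XOR swap microcode above without touching the FPGA, here is a minimal Python sketch. It is not the light8080 microcode assembler or simulator. The register dict and the function names are hypothetical. It assumes one T cycle per microinstruction line plus the four fetch/decode cycles quoted earlier. Each `^=` stands for one `T1 = ... ; NOP` / `T2 = ... ; ... = XRL` pair from the listing, and flag handling (`#fp_rc`, `#clr_acy`) is not modelled.

```python
# Hypothetical model of the XOR swap microcode; registers are plain 8-bit ints.

FETCH_DECODE_CYCLES = 4  # figure quoted in the text, not measured


def xor_swap(regs, a, b):
    """Swap regs[a] and regs[b] with three XORs.

    Returns the number of microinstructions used, assuming two lines
    (load T1, then load T2 and write the XRL result) per XOR step.
    """
    regs[a] ^= regs[b]  # T1 = a ; T2 = b ; a = XRL
    regs[b] ^= regs[a]  # T1 = b ; T2 = a ; b = XRL
    regs[a] ^= regs[b]  # T1 = a ; T2 = b ; a = XRL
    return 3 * 2


def xchg_listing(regs):
    """The listing as written: only D and H are exchanged."""
    return xor_swap(regs, "d", "h")


def xchg_full(regs):
    """Assumed complete XCHG: the same three steps for E and L as well."""
    return xor_swap(regs, "d", "h") + xor_swap(regs, "e", "l")


if __name__ == "__main__":
    for step in (xchg_listing, xchg_full):
        regs = {"d": 0x12, "e": 0x34, "h": 0xAB, "l": 0xCD}
        lines = step(regs)
        print(step.__name__, regs, "T cycles:", lines + FETCH_DECODE_CYCLES)
```

In this model the listing as written exchanges D and H in 6 microinstructions, which matches the 10 T cycle estimate, but it leaves E and L alone, as the `swap d<->h` comment suggests. Repeating the three XOR steps for E and L brings the count back to 12 microinstructions, so whether the trick really beats the original depends on how the second byte pair can be handled.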

Beware: I haven't tested this yet, nor have I proven its correctness!