Scopetrex

Ever since I started hacking on computer hardware and software in the 1980s, Motorola's 6809 processor has been one of my favorite 8-bit CPUs. The 6809 is the last generation of Motorola's 8-bit CPU family, which started with the 6800 in 1974 and was also used in a number of microcontrollers of the 6805, HC11 and 9S12 families. The latter are still used in automotive applications today. If you know the 6502, the 6809 feels quite familiar, since it has an identical bus interface and a very similar assembler programming model without some of the constraints of the 6502, such as an 8-bit stack pointer or a lack of 16-bit address registers. This is not really surprising, since a number of Motorola 6800 engineers left the company, joined MOS Technology and developed the 6502 there.

However, the 6809 only came to the market in 1978 - only a year before the 68000 - and thus was quite late (and also rather expensive compared to the 6502 and Z80). Only a few home computers used the processor, e.g. the Dragon 32 and 64, the Tandy Color Computer and Thomson's MO5 and TO7.

Another system which used the 6809 was a quite special game console, the Vectrex. Instead of a standard raster-based screen, the Vectrex used its CRT as a vector display by controlling the X and Y deflection as well as the intensity of the CRT's electron beam directly. This is done by generating analog voltages for the X and Y position using an 8-bit digital-to-analog converter (DAC0808). Since DACs were quite expensive back then, the single DAC analog output is multiplexed using a CD4052 analog multiplexer to the various connectors. The rest of the Vectrex hardware is rather simple. A 6522 VIA connects to the DAC and some control functions, and an AY3-8912 chip generates sound and provides another 8-bit port to interface to the Vectrex controllers. The remaining chips are an 8 kB ROM, 1 kB of SRAM (32 kB in the Scopetrex), some 74-series glue logic and a number of op-amps and other analog chips to generate the analog signal outputs.

Vectrexes were only built for about two years and unfortunately have become highly sought after (read: expensive) collector's items. Luckily, since the Vectrex uses only standard components, it is rather simple to build a clone. The software situation is also quite nice, since Jay Smith, then head of Smith Engineering (the designer of the Vectrex), allowed new hard/software development on a fee- and royalty-free basis and has also allowed duplication of the original Vectrex software on a not-for-profit basis.

Such a Vectrex clone is available in the form of the Scopetrex by TubeTime. This is not a 100% replica since it uses a different PCB form factor and a 32 kB SRAM instead of the original 1 kB, but it is perfectly compatible with all original software. It's a good project to get started with a more complex hardware build if you have some soldering experience, and most of the parts are still available new from vendors such as DigiKey or Mouser. The 6809 CPU and the sound chip can be easily found on AliExpress. For the CPU, you will need at least the 1.5 MHz version 68A09 (a 68B09 will also work fine); both the version with internal clock generator and the one without, which has the "E" suffix, can be used. For the sound chip, an AY3-8910 or YM2149 can also be used.

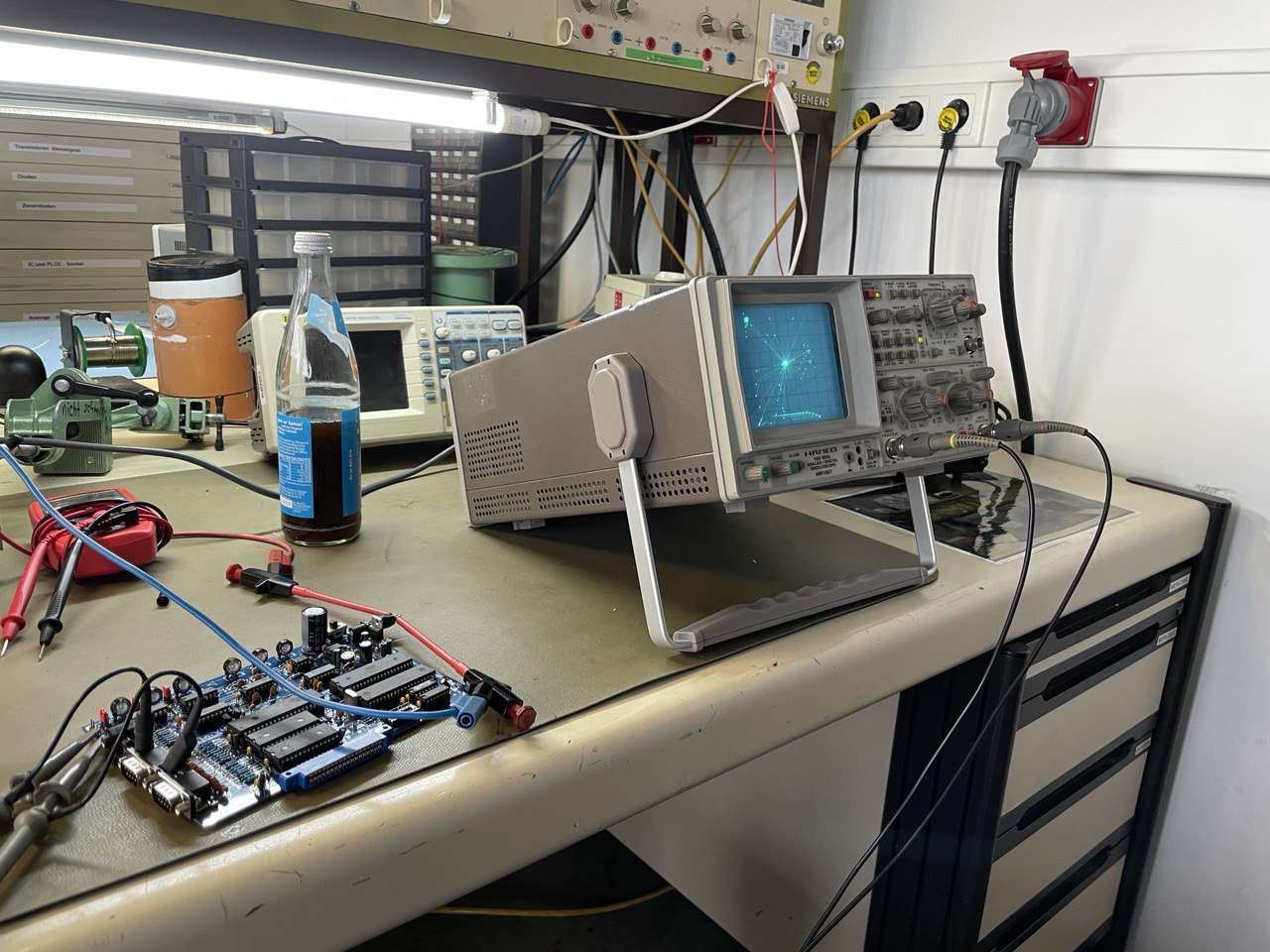

My Vectrex build worked out of the box; now I only need to find a suitable vector display. You can use an oscilloscope in X-Y mode, but most modern scopes no longer feature a Z (intensity) input, so the display is disturbed by vectors which should not be drawn (intensity = 0). If you have an old analog Hameg 203, this will work nicely... A picture of the successful bring-up is shown below.

By coincidence, a colleague in Germany has also started building Scopetrexes. Small world...

Tags: Scopetrex, Vectrex, Clone, Motorola, 6809

Lisp in Small Processors

While AI is all the rage again, the previous AI wave focused on languages like Lisp and Prolog and even produced hardware with special processors optimized to execute Lisp code, made by companies such as Symbolics, Lisp Machines, Inc., and Texas Instruments with its Explorer series. The machines used OSes written in Lisp and provided the user with large, bitmapped screens to operate a mouse-based GUI and networking.

Lisp machines originated at MIT's AI Lab and were influential for a number of reasons. A certain Richard Stallman was a graduate student at the AI Lab and, unhappy with the commercialization of the Lisp technology by LMI and Symbolics, started to develop Free Software such as Emacs and the gcc compiler and founded the Free Software Foundation. The LMI LAMBDA machine was based on Western Digital's NuBus bus system and was subsequently acquired by TI to create the Explorer.

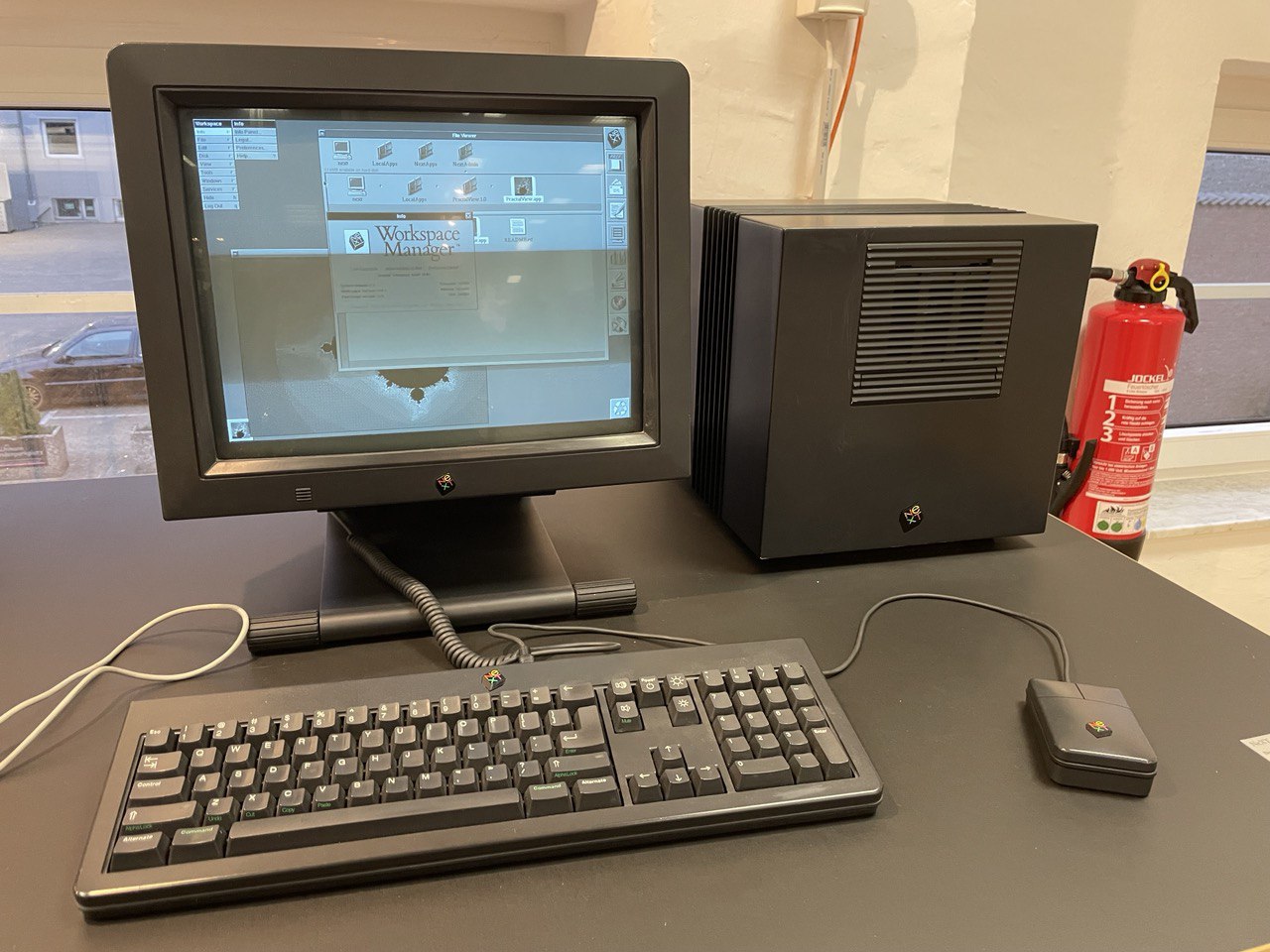

The NuBus was, in turn, used by Motorola 68k-based Macintosh and early PowerPC systems as well as the NeXT Cube. These NuBus-based Macs were subsequently used to create personal Lisp workstations which employed a VLSI integrated Lisp chip and used the Mac as I/O system. Both Symbolics and TI offered such a system, MacIvory and MicroExplorer, respectively. The latest development is an emulator of Symbolics Lisp machines that runs the Genera OS.

If you own a MacIvory or MicroExplorer card you want to sell, please get in touch.

However, there were also efforts to implement Lisp on smaller machines, including popular 8-bit CPUs. The August 1979 issue of BYTE magazine was dedicated to Lisp and not only provided a lot of background on the language and its applications, but also published the implementation of a Lisp interpreter written by S. Tucker Taft in assembly for the Motorola 6800. Unfortunately, the space in that issue was constrained, so only a part of the interpreter source code was published and the complete code could be ordered from BYTE. 45 years later, this is no longer possible, so I thought the code was lost...

Thanks to the power of Mastodon, Pablo Marx (thanks a lot!) managed to find the original listing of that Lisp implementation and sent me the scanned document as a PDF as well as a link to his GitHub repository with the 6800 Lisp code.

If you are interested in how a low-level implementation of a Lisp VM, including the REPL, lambdas, and a garbage collector, works, I can recommend taking a look at this article and the accompanying source code. I'm thinking of porting the VM to my Scopetrex Vectrex game console clone. This is a fascinating piece of hardware based on Motorola's 6809 CPU, the successor of the 6800, which is not binary compatible. However, the assembler source code can be easily converted. The Vectrex and the Scopetrex clone use a vector CRT display, which makes developing software for the system quite interesting.

Automatic conversion of the assembly code should also be possible, e.g. there is a 6502 to 6809 converter.

Tags: Lisp, Motorola, BYTE, 6800

Computer History

As some of my regular readers (if these exist...) may already know, I have a certain fondness for old computers and their history.

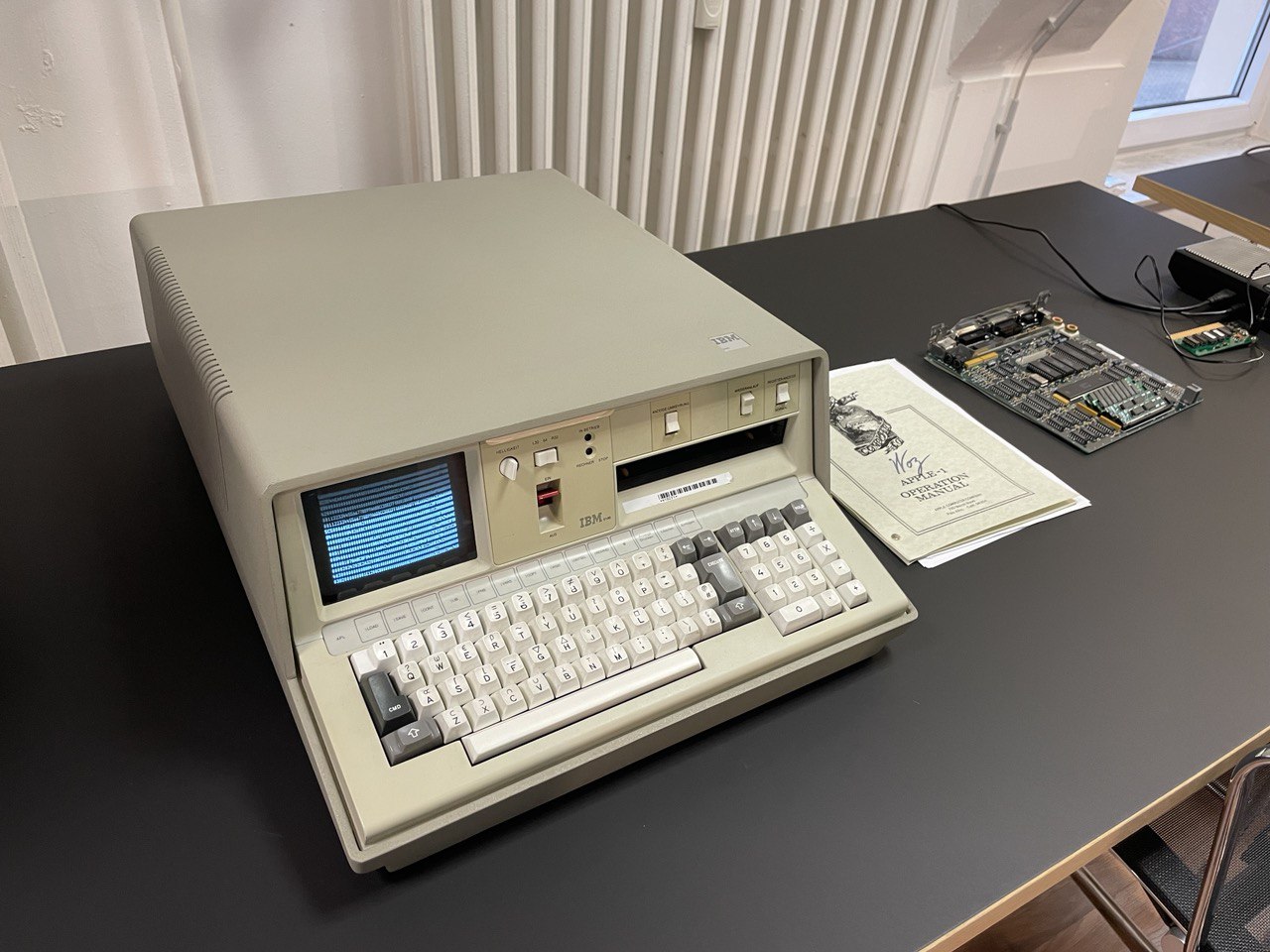

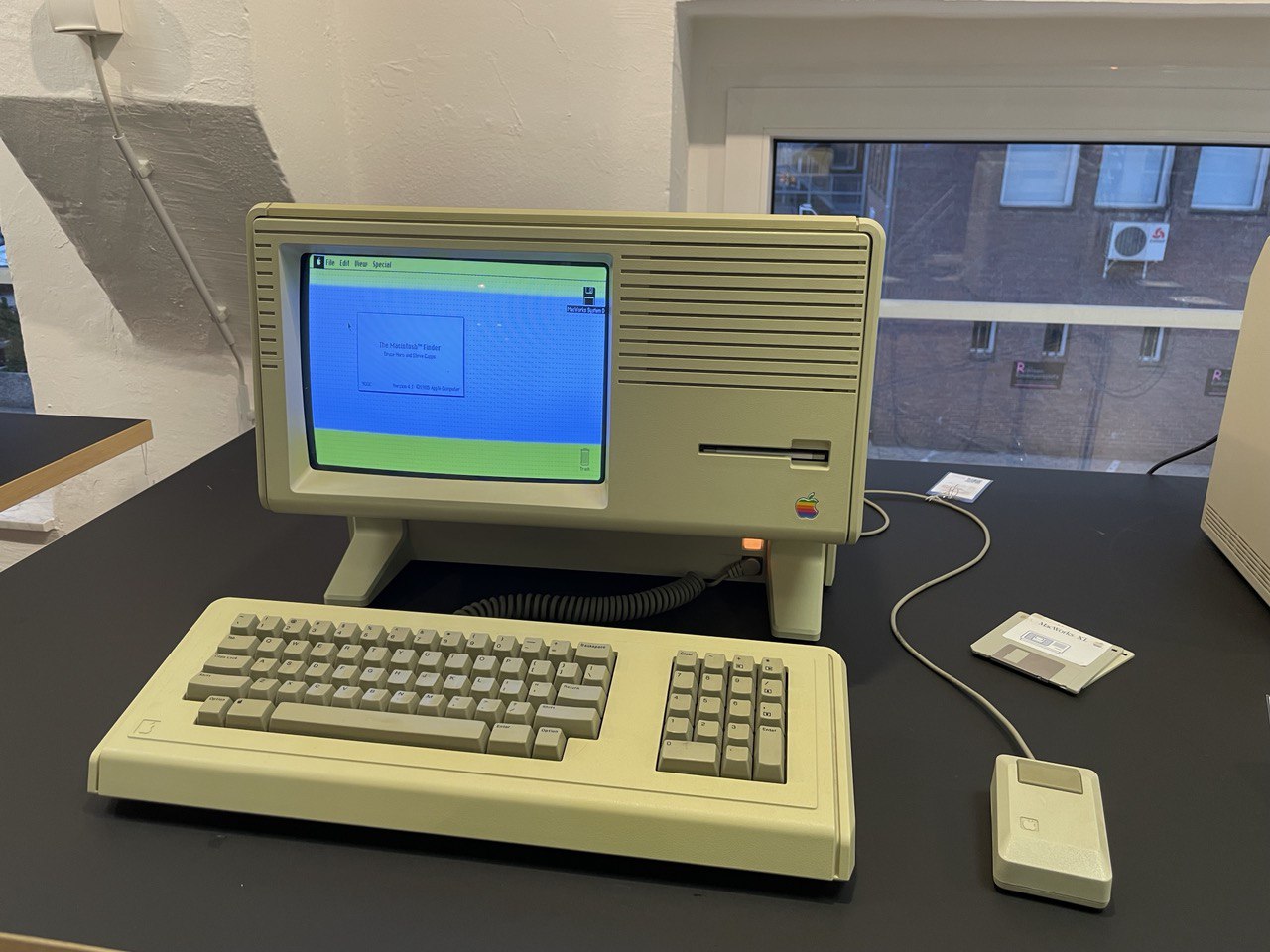

In February, members of the German Computer Society's SIG on Computer History met at the University of Bonn. We had a number of very interesting talks from different angles of computer history - preservation and museums, a film project, the restoration process of Zuse's Z1 mechanical computer, and program listings in 1980s home computer magazines. I contributed my report on reproducing CS research from the 1990s.

A big thank you to Stefan Höltgen for organizing the event!

One highlight was the visit to the Arithmeum, the University's computer museum. In addition to an impressive collection of mechanical computing machines (including an Enigma!), the museum also features an exhibition of electronic computers (tours on demand). I thought some pictures might be interesting...

Tags: GI, InfoHist, Computergeschichte, Bonn, Arithmeum

Bamberg at Night

And some shots of Bamberg at night...

Some Impressions of Bamberg

Just some pictures of Bamberg I took throughout the past year.

One Year Later...

Big news, a bit late. Almost exactly one year ago (in late March 2022), I returned to Germany to start my new job as a full professor and head of the System Programming research group at Bamberg University in the Faculty of Information Systems and Applied Computer Sciences.

In Bamberg, for now I concentrate on teaching master level courses, projects and seminars on operating systems engineering and virtualization as well as research on microkernel design for modern architectures and related topics - with a focus on implementing systems on the RISC V architecture.

We also started to gain a lot of experience implementing system and bare-metal code in Rust with great results and will continue to offer Rust as an alternative implementation language to C (along with an optional Rust programming course).

Among many other things, during the previous year I was a co-organizer of the Third Winter School on Operating Systems (WSOS 2023) and acted as program chair for the 9th International Workshop on Plan 9, which takes place in Waterloo, Ontario, Canada, from April 21st-23rd, 2023.

Expect more updates soon including a report from iwp9 – I hope it won't take more than two years for another update...

Tags: Bamberg, University, WIAI, SYSNAP

Exploring Binary Translation on Apple Silicon (part 1)

You have probably read about Apple's new Macs which switched from Intel CPUs to Apple's new in-house designed M1 "Apple Silicon" chip, which is based on the 64-bit ARM (AArch64) instruction set architecture (ISA).

When a company makes a transition like this, one of the challenges is to ensure that the existing base of software products continues to work. Since the AArch64 ISA is not compatible with the old 64-bit x86-64 architecture, applications have to be recompiled to enable running them on ARM (this would not be an insurmountable problem if all applications were open source). However, some companies are slow to recompile their software and some others might even have lost the source code to their software -- this happens far more often than you think -- or went out of business years ago. Nevertheless, there will be a customer out there who relies on such an old piece of binary-only x86 software and you don't want to discourage them from buying your shiny new hardware...

Thus, for a transition period, other solutions to enable running old, binary incompatible software are required to keep your users happy. This is what this blog post series is about; we will focus on Rosetta 2 in upcoming posts. In the first part of this blog post series, I will provide you with a bit of background on emulation and binary translation.

Interpreting Emulators

One very simple approach is to create an emulator that interprets the x86 machine instructions one after the other in order of the program's control flow and dynamically maps them to instructions the new CPU can understand, often building a model of the emulated CPU in C or assembly.

An example of how an interpreting emulator implements the x86 instruction "ADD AL, imm8" (add an 8-bit immediate value to the accumulator low byte) might look like this (caution, this is untested pseudo-code written for legibility):

    while (!end) {
        instruction = get_opcode(regs.pc);
        switch (instruction) {
        case 0x04: // opcode byte for ADD AL, imm8
            // fetch operand byte
            operand = get_byte(regs.pc+1);
            // update result
            regs.ax = (regs.ax & 0xFFFFFF00) | (((regs.ax & 0xFF) + operand) & 0xFF);
            // set condition flags
            regs.flags.cf = ...; // carry
            regs.flags.of = ...; // overflow
            // update program counter
            regs.pc = regs.pc + 2;
            break;
        case 0x05: // opcode byte for ADD EAX, imm32
            ...
        }
    }

It's probably easy to see that the interpreted emulation of this simple opcode in C requires quite a bit of overhead. In the emulator code, things that run in parallel on real hardware, such as updating the processor flags and the PC while the result of the addition is calculated, have to be executed serially and thus take much more time compared to execution in hardware.

The interpreter also has to assume the worst case that all of the calculated results are required, so it always has to calculate the values of the carry and overflow flags even though their values are never read or directly overwritten in subsequent code -- it has no knowledge of future instructions.

This mode of emulation is especially inefficient when loops are to be executed. Here, the same set of cases of our switch statement would have to be executed over and over again.
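To make the pseudo-code above a bit more concrete, here is a minimal sketch in C that can actually be compiled. It only knows two opcodes, ADD AL, imm8 (0x04) and HLT (0xF4, used here just to stop the loop), and it fills in the carry and overflow calculation using the usual rules for an 8-bit addition. The names `guest_regs` and `interpret` are made up for this illustration and the guest code is assumed to live in a plain byte buffer.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical guest state: only what this sketch needs. */
typedef struct {
    uint32_t eax;   /* AL is the low byte of EAX */
    uint32_t pc;    /* offset into the guest code buffer */
    bool cf;        /* carry flag */
    bool of;        /* overflow flag */
} guest_regs;

/* Interpret guest code until HLT, an unknown opcode or the end of the
 * buffer is reached. Returns the number of ADD instructions executed. */
int interpret(guest_regs *regs, const uint8_t *code, size_t len)
{
    int executed = 0;

    assert(regs != NULL && code != NULL);
    while (regs->pc < len) {
        uint8_t opcode = code[regs->pc];

        switch (opcode) {
        case 0x04: { /* ADD AL, imm8 */
            if (regs->pc + 1 >= len)
                return executed;        /* truncated instruction */
            uint8_t al = (uint8_t)(regs->eax & 0xFF);
            uint8_t operand = code[regs->pc + 1];
            unsigned sum = (unsigned)al + operand;
            uint8_t result = (uint8_t)sum;

            /* update result: only the low byte of EAX changes */
            regs->eax = (regs->eax & 0xFFFFFF00u) | result;
            /* carry: the unsigned sum does not fit into 8 bits */
            regs->cf = sum > 0xFF;
            /* overflow: both inputs have the same sign, the result differs */
            regs->of = ((al ^ result) & (operand ^ result) & 0x80) != 0;
            /* update program counter */
            regs->pc += 2;
            executed++;
            break;
        }
        default: /* HLT (0xF4) or an opcode this sketch does not know */
            return executed;
        }
    }
    return executed;
}
```

Running this on the bytes `04 20 F4` with AL = 0xF0 leaves AL = 0x10 with the carry flag set and the overflow flag clear. Note that both flags are computed on every ADD, whether or not any later instruction reads them.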

Dynamic Binary Translation -- Just-in-Time

To improve the execution performance for code that is executed multiple times in one program run, it is useful to cache the results of a translation process, i.e. the instructions of the emulator that are executed while a program is emulated.

This is the basic idea behind just-in-time translation (JIT). Instead of executing instructions whenever an instruction to be emulated is encountered, the JIT compiler instead generates a sequence of machine instructions to emulate the instruction and stores this sequence in a translation buffer. After the translation of some instructions, the JIT system jumps to the code.

The problem with this is how many instructions are to be translated before the JIT system can start to execute them. To get an idea of what is happening here, we need to take a look at a fundamental concept of program structure: basic blocks.

Wikipedia defines a basic block as follows:

In compiler construction, a basic block is a straight-line code sequence with no branches in except to the entry and no branches out except at the exit.

Accordingly, the code execution inside of a basic block is predictable; since there is no control flow except at the end, we can always translate all of the instructions inside of a basic block at the same time (this can be made even more efficient through hyperblocks and methods such as trace scheduling [1]). So some pseudo-code for a JIT compiler might look like this (note this is not too dissimilar from our interpreter code above):

    pc_type translate_basic_block(pc_type pc) {
        // mark basic block as translated
        mark_basic_block_translated(pc);
        // translate instructions from the start of the basic block
        // up to and including the first branch instruction
        do {
            instruction = get_opcode(pc);
            switch (instruction) {
            case 0x04: // opcode byte for ADD AL, imm8
                // fetch operand byte
                operand = get_byte(pc+1);
                // generate code to calculate the result:
                // TMP1 = (AL + operand) & 0xFF, then merge TMP1 into the low byte of AX
                emit(INSN_ANDI, nativeregs[REG_TMP1], nativeregs[REG_AX], 0xFF);
                emit(INSN_ADDI, nativeregs[REG_TMP1], nativeregs[REG_TMP1], operand);
                emit(INSN_ANDI, nativeregs[REG_TMP1], nativeregs[REG_TMP1], 0xFF);
                emit(INSN_ANDI, nativeregs[REG_AX], nativeregs[REG_AX], 0xFFFFFF00);
                emit(INSN_OR, nativeregs[REG_AX], nativeregs[REG_AX], nativeregs[REG_TMP1]);
                // emit code to calculate the condition flags
                emit(....); // carry
                emit(....); // overflow
                // update the program counter
                pc = pc + 2;
                break;
            case 0x05: // opcode byte for ADD EAX, imm32
                ...
            }
        } while (type(instruction) != BRANCH);
        // emit an instruction to return to the JIT system's main loop
        emit(INSN_RET);
        // return the emulated PC following the translated basic block
        return(pc);
    }

In this code I make a number of assumptions which might be problematic in a real-world JIT compiler. One assumption is that the target machine has more registers than the machine to be emulated. In the piece of code above, a temporary register REG_TMP1 is used to hold an intermediate result. A real-world JIT compiler would try to apply some optimizations, e.g. using register allocation methods known from compiler construction, to reduce the number of registers used in the translated code.

Another simplification here is that the code does not show how the JIT system learns at which location in the emulated code the execution should continue after a JITed piece of native code returns. This could be implemented so that the code emulating the branch instruction writes the following PC value to a special register.

The JIT compiler would then run a loop like this:

    pc = entry_point();
    while (!end) {
        // check if basic block is already translated
        if (! basic_block_is_translated(pc)) {
            translate_basic_block(pc);
        }
        // call the generated native instructions, which return the next emulated PC
        pc = call(translation_buffer_address(pc));
    }

This code has no benefits if the program to be translated has no loops (or functions which are called multiple times); in fact, it would possibly imply some overhead since code is first translated and then executed only once. However, as soon as a basic block is executed multiple times, we only need to translate this basic block once and then only call the code repeatedly.
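The effect of translating once and executing often can be seen in the following small C sketch. Caution: it does not generate native code. "Translation" here only pre-decodes a basic block into an array of micro-ops that is cached per entry PC, which is enough to count how often the translator runs compared to how often a cached block is executed. The guest is a tiny x86 subset (ADD AL, imm8 = 0x04, DEC CL = 0xFE 0xC9, JNZ rel8 = 0x75, HLT = 0xF4), and all type and function names are invented for this example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum { MAX_CODE = 64, MAX_OPS = 8, PC_HALT = -1 };

typedef enum { OP_ADD_AL, OP_DEC_CL, OP_JNZ, OP_HLT } op_kind;

/* One pre-decoded guest instruction; arg is an immediate or a branch target. */
typedef struct { op_kind kind; int arg; } micro_op;

typedef struct {
    bool translated;
    int n_ops;
    micro_op ops[MAX_OPS];
    int fallthrough;            /* guest pc that follows the block */
} block;

typedef struct {
    uint8_t al, cl;
    bool zf;
    int translations;           /* how often the translator ran */
    int executions;             /* how often a cached block was run */
    block cache[MAX_CODE];      /* indexed by the guest pc of the block entry */
} jit_state;

/* Decode one basic block starting at pc and store it in the cache. */
void translate_block(jit_state *s, const uint8_t *code, int len, int pc)
{
    block *b = &s->cache[pc];
    bool done = false;

    b->n_ops = 0;
    while (!done && b->n_ops < MAX_OPS) {
        /* Anything that does not fit into the buffer is treated as HLT. */
        uint8_t opcode = (pc + 1 < len) ? code[pc] : 0xF4;
        micro_op op = { OP_HLT, 0 };

        switch (opcode) {
        case 0x04: op.kind = OP_ADD_AL; op.arg = code[pc + 1]; pc += 2; break;
        case 0xFE: op.kind = OP_DEC_CL; pc += 2; break; /* assumes FE C9 */
        case 0x75: /* JNZ rel8 ends the basic block */
            op.kind = OP_JNZ;
            op.arg = pc + 2 + (int8_t)code[pc + 1];
            pc += 2;
            done = true;
            break;
        default: done = true; break; /* HLT or unknown opcode */
        }
        b->ops[b->n_ops++] = op;
    }
    b->fallthrough = pc;
    b->translated = true;
    s->translations++;
}

/* Run a cached block and return the next guest pc (or PC_HALT). */
int execute_block(jit_state *s, const block *b)
{
    s->executions++;
    for (int i = 0; i < b->n_ops; i++) {
        const micro_op *op = &b->ops[i];
        switch (op->kind) {
        case OP_ADD_AL: s->al = (uint8_t)(s->al + op->arg); break;
        case OP_DEC_CL: s->cl--; s->zf = (s->cl == 0); break;
        case OP_JNZ:    if (!s->zf) return op->arg; break;
        case OP_HLT:    return PC_HALT;
        }
    }
    return b->fallthrough;
}

/* Main loop: translate a block on first use, then only execute it. */
void run(jit_state *s, const uint8_t *code, int len)
{
    int pc = 0;
    while (pc != PC_HALT) {
        assert(pc >= 0 && pc < MAX_CODE);
        if (!s->cache[pc].translated)
            translate_block(s, code, len, pc);
        pc = execute_block(s, &s->cache[pc]);
    }
}
```

For the guest program `04 03 FE C9 75 FA F4` (add 3 to AL, decrement CL, loop while CL is not zero, halt) started with a zeroed `jit_state` and CL = 5, the loop body is translated once and executed five times. Together with the final HLT block this gives two translations and six block executions.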

Possible Optimizations

This approach can be optimized in a number of ways. The first problem with JIT translation is that the translation process requires additional memory to store the native code. One can reduce the memory overhead here by evicting translations of basic blocks from the translation buffer after some time. Of course, this brings along all the well-known problems of cache replacement algorithms; so if an evicted basic block translation is needed again, the related code has to be retranslated.
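As a toy illustration of the eviction problem, the following C sketch models a translation buffer with only four slots that is indexed by the entry PC modulo the number of slots (a direct-mapped cache, which is just one of many possible replacement policies and not necessarily what a real JIT uses). The "translation" itself is only simulated by storing the PC as a tag; `tcache` and its functions are hypothetical names.

```c
#include <assert.h>

enum { SLOTS = 4, EMPTY = -1 };

typedef struct {
    int tag[SLOTS];     /* guest pc whose translation occupies the slot */
    int translations;   /* translator invocations, including retranslations */
    int evictions;      /* translations that had to be thrown away */
} tcache;

void tcache_init(tcache *c)
{
    for (int i = 0; i < SLOTS; i++)
        c->tag[i] = EMPTY;
    c->translations = 0;
    c->evictions = 0;
}

/* Look up the block starting at pc. On a miss, the block is (re)translated
 * and replaces whatever occupied its slot. Returns 1 on a hit, 0 on a miss. */
int tcache_lookup(tcache *c, int pc)
{
    assert(pc >= 0);
    int slot = pc % SLOTS;

    if (c->tag[slot] == pc)
        return 1;               /* translation is still cached */
    if (c->tag[slot] != EMPTY)
        c->evictions++;         /* another block has to make room */
    c->tag[slot] = pc;          /* stands in for running the translator */
    c->translations++;
    return 0;
}
```

Looking up the entry PCs 0, 4, 0, 1, 1 in this order results in four translations and two evictions: blocks 0 and 4 compete for the same slot, so block 0 has to be translated twice, whereas the second lookup of block 1 is a hit.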

The JIT approach also has some benefits. One of them is that the translation process is dynamic, so it follows the execution of the currently emulated program instance. Thus, if a path through a program is never taken -- for example, you only edit a document in a word processor but do not print it, so the printing code is never executed in this specific program run -- the related code is never translated, saving time and memory space.

Practical Problems with JIT on Modern Computers and Operating Systems

Implementing a JIT compiler today is a bit more complex than the pattern described above, of course. I'll describe a selection of real-world problems below.

On modern operating systems, a process is usually not allowed to modify its executable code. This so-called "W^X" protection (write XOR execute -- a memory page can be either writable or executable, but not both) of code (text segment) memory pages serves as a protection against malware, which often tries to overwrite existing program code, e.g. by exploiting a buffer overflow, in order to change the instructions, and thus the behaviour, of the attacked program. Accordingly, some additional calls to the OS (e.g. mprotect on Unix) and possibly special capabilities are required so that a JIT compiler can also execute the code it generated.
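The following C sketch shows what such a sequence of OS calls can look like on a Unix-like system: map a page as writable, copy a few bytes of machine code into it, switch the page to executable with mprotect, and only then call it. This is a minimal sketch under several assumptions: it was written with Linux in mind, it relies on the GCC/Clang builtin `__builtin___clear_cache`, it only contains hand-assembled code templates for x86-64 and AArch64, and platforms with stricter policies (for example macOS on Apple Silicon) may require additional steps that are not shown. The function name `run_generated_code` is made up.

```c
#define _DEFAULT_SOURCE         /* for MAP_ANONYMOUS on glibc */
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

#if defined(__x86_64__)
/* mov eax, 42 ; ret */
static const uint8_t template_code[] = { 0xB8, 0x2A, 0x00, 0x00, 0x00, 0xC3 };
#elif defined(__aarch64__)
/* mov w0, #42 ; ret (little-endian instruction words) */
static const uint8_t template_code[] = { 0x40, 0x05, 0x80, 0x52,
                                         0xC0, 0x03, 0x5F, 0xD6 };
#else
#error "this sketch only has code templates for x86-64 and AArch64"
#endif

/* Returns the value produced by the generated code, or -1 on failure. */
int run_generated_code(void)
{
    long page = sysconf(_SC_PAGESIZE);
    if (page <= 0)
        return -1;
    size_t size = (size_t)page;
    assert(sizeof template_code <= size);

    /* 1. Map a page that is writable but not executable. */
    uint8_t *buf = mmap(NULL, size, PROT_READ | PROT_WRITE,
                        MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (buf == MAP_FAILED)
        return -1;

    /* 2. "Generate" code; a real JIT would emit instructions here. */
    memcpy(buf, template_code, sizeof template_code);

    /* 3. Drop write permission and add execute permission (W^X). */
    if (mprotect(buf, size, PROT_READ | PROT_EXEC) != 0) {
        munmap(buf, size);
        return -1;
    }
    /* Make sure instruction fetches see the new bytes (needed on ARM). */
    __builtin___clear_cache((char *)buf, (char *)buf + sizeof template_code);

    /* 4. Call the generated code like an ordinary C function. */
    int (*fn)(void) = (int (*)(void))(uintptr_t)buf;
    int result = fn();

    munmap(buf, size);
    return result;
}
```

On a system that permits this sequence, `run_generated_code()` returns 42. At no point is the page writable and executable at the same time, which is exactly what W^X demands.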

Exception handling is another problem. Whenever a program does something out of the ordinary, e.g. it tries to divide by zero or attempts to read from an invalid memory address, the JIT translated program has to detect this condition and handle it accordingly. This exception handling can cause significant overhead.

For the multicore processors available today, another problem is the semantics of parallel execution of threads of a process on multiple cores. I won't go into details here (this might be an interesting topic for a future blog post), but differences in memory access ordering for concurrent reads and writes of different cores create problems that might change the semantics of a translated multithreaded program that is being executed on multiple cores. A correct implementation of a different memory ordering in software requires significant overhead. Spoiler: Apple has implemented additional functionality for different store order semantics in Apple Silicon cores to make emulation more efficient.

Much more information on approaches to JIT compilation and possible optimizations can be found in the great book on virtualization by Smith and Nair [2].

Static Binary Translation

One problem with JIT translation is that all the work invested to translate (parts of) the program is lost at the end of the program's execution. Some binary translation systems, such as Digital's FX!32 [3], which JIT translated x86 code to code for Digital's 64-bit Alpha AXP processor, cached translation results beyond the runtime of a program. The Alpha was essentially in the same position as Apple Silicon is today -- its performance was significantly higher than the performance of x86 processors of the time, so FX!32 enabled fast execution of JIT translated x86 binaries on that platform.

Can we improve this somehow? Let us compare the translation process of code to the translation of natural languages. On the one hand, you can hire a (human or AI) language translator to translate, for example Norwegian to German, as the words are spoken or read. This is interpretation which, of course, has significant overhead.

JIT translation for natural languages would require translating larger blocks, e.g. paragraphs, one at a time and caching the results. Since text does not tend to repeat that often in spoken or written texts, this unfortunately breaks the analogy a bit ;-).

For natural languages, of course, the problem of efficiency has been solved for many millennia. What you can do is to translate the foreign language text once and write down the translated result in a book or essay. After that, you don't need the original any more and can refer to the translated text. However, there are some problems with this, for example if the translated text is imprecise or ambiguous (I think readers of the Bible will have quite some experience with this), which requires referencing the original text for clarity.

We can try to do the same to translate programs from one binary representation to another one. This is called static binary translation and comes with its own set of problems. For example, problems similar to those of translating books can also show up here and require referencing the original binary. We will take a look at static binary translation in an upcoming blog post.

There's more to emulation and translation

Emulating the CPU is usually not sufficient to execute a program. One important question is whether you only want to emulate user mode programs or also run a complete operating system in emulation.

For user mode emulation, the overhead of emulating the CPU itself is lower, since user mode programs only have access to a restricted subset of a CPU's functionality. However, all interaction of the program with the underlying OS has to be emulated. For similar systems (e.g. different Unix systems or even the same OS running on a different CPU platform, as is the case for macOS) this can be relatively straightforward. Supporting the system calls of a different OS is much more work. This is, for example, implemented in the Windows Subsystem for Linux (WSL), which allows the execution of Linux user-mode programs on Windows 10 (but does not perform binary translation, since the source and target platforms are both x86-64).
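Even in the "relatively straightforward" case of the same OS on a different CPU, the system call interface is not identical. As a hedged example outside of the macOS case discussed here, Linux assigns different system call numbers on x86-64 and on AArch64, so a user mode emulator has to map the guest's number to the host's before forwarding a call. The C sketch below shows such a mapping for a handful of calls; the table is deliberately tiny, argument and structure layout conversion is ignored, and `syscall_map` and `translate_syscall` are names invented for this illustration.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    int guest_nr;       /* system call number on x86-64 Linux (guest) */
    int host_nr;        /* system call number on AArch64 Linux (host) */
    const char *name;
} syscall_map;

static const syscall_map table[] = {
    {  0,  63, "read"   },
    {  1,  64, "write"  },
    {  3,  57, "close"  },
    { 39, 172, "getpid" },
    { 60,  93, "exit"   },
};

/* Map a guest system call number to the host number.
 * Returns -1 for calls this sketch does not know. */
int translate_syscall(int guest_nr)
{
    for (size_t i = 0; i < sizeof table / sizeof table[0]; i++) {
        if (table[i].guest_nr == guest_nr)
            return table[i].host_nr;
    }
    return -1;
}
```

A real emulator needs far more than this lookup, e.g. converting structures whose layout differs between the two ABIs, but the table already hints at why supporting the system calls of an entirely different OS is so much more work.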

In case you also want to run a complete OS (or other bare-metal code) for a different architecture, you need to reproduce the behaviour of the underlying hardware of the complete system, including I/O, the memory system, possible coprocessors, etc. This comes with a lot of overhead, but is routinely done e.g. to emulate vintage computer systems or game consoles.

The next blog post in this series will have a closer look at static binary translation and the related problems before we dig deeper into Rosetta 2.

References

- [1] Joseph A. Fisher. Trace Scheduling: A Technique for Global Microcode Compaction. IEEE Transactions on Computers. 30 (7): 478–490, 1981. doi:10.1109/TC.1981.1675827.

- [2] Jim Smith and Ravi Nair. Virtual Machines -- Versatile Platforms for Systems and Processes. 1st Edition 2005. Morgan Kaufmann. ISBN-13: 978-1558609105

- [3] DIGITAL FX!32: Combining Emulation and Binary Translation from the Digital Technical Journal, Volume 9 Number 1, 1997. (pdf)

Tags: Apple Silicon, M1, Rosetta 2, binary translation, ARM, emulation, JIT

RISC V operating systems

Some of my master project students will work on porting different operating systems (Oberon, Plan 9 and Inferno) to 32-bit RISC V-based systems. The limitation to 32-bit means that it should be possible to run the ported systems on small FPGA-based boards, such as the ultraembedded RISC V SoC running on a Digilent Arty board (based on a Xilinx Artix 7 FPGA) or a Radiona ULX3S, which uses a Lattice ECP5 FPGA and is supported by the open source symbiflow Verilog toolchain.

To provide my students with a bit of example code, an existing more-or-less complex OS running on RISC V would be nice to have. One well executed and documented teaching OS is MIT's xv6, a reimplementation of 6th edition Unix in modern C which is missing some features not that relevant for an OS course (or left as an exercise for the students).

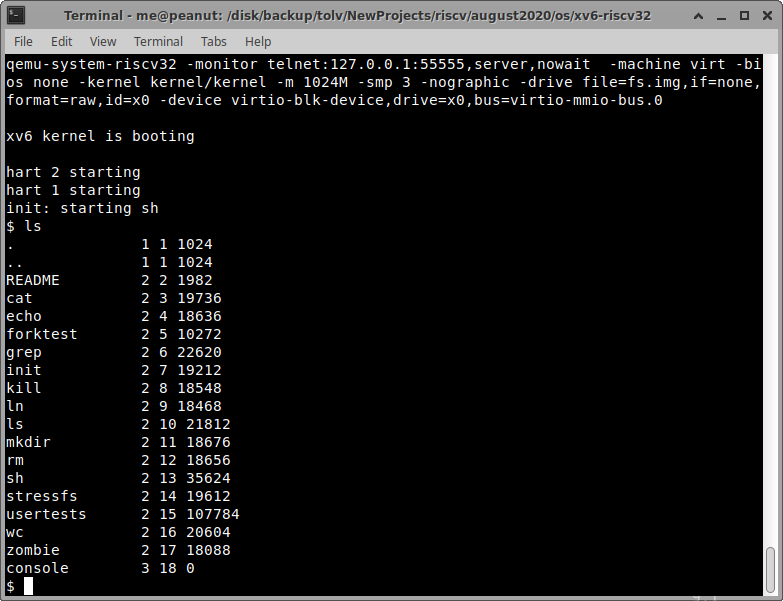

There is a port of xv6 available for 64-bit RISC V. This doesn't work out of the box for 32-bit RISC V (RV32I), since the sizes of data types and registers are different and the virtual memory scheme differs as well (Sv32 instead of Sv39). Thus, I created an RV32I port of xv6 on a rainy afternoon here in Trondheim. This version currently runs in QEMU; I'm working on a port to the ultraembedded RISC V SoC. Here's the most boring screenshot ever ;-).
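To give an idea of what the difference in virtual memory management means for the code, here is a small C sketch of how a virtual address is split into page table indices in both schemes: Sv32 uses two levels with 10 index bits each, Sv39 uses three levels with 9 index bits each, and both use a 12-bit page offset. This is not taken from the actual port; the function names are made up, and the last helper mirrors the kind of address-to-PTE conversion a kernel needs (in both schemes the physical page number starts at bit 10 of a page table entry).

```c
#include <assert.h>
#include <stdint.h>

enum { PAGE_SHIFT = 12, SV32_VPN_BITS = 10, SV39_VPN_BITS = 9 };

/* Sv32: page table index for level 1 (root) or level 0 (leaf). */
uint32_t sv32_vpn(uint32_t va, int level)
{
    assert(level == 0 || level == 1);
    return (va >> (PAGE_SHIFT + SV32_VPN_BITS * level)) & 0x3FF;
}

/* Sv39: page table index for level 2 (root), 1 or 0 (leaf). */
uint32_t sv39_vpn(uint64_t va, int level)
{
    assert(level >= 0 && level <= 2);
    return (uint32_t)((va >> (PAGE_SHIFT + SV39_VPN_BITS * level)) & 0x1FF);
}

/* The offset within a 4 kB page is the same in both schemes. */
uint32_t page_offset(uint64_t va)
{
    return (uint32_t)(va & 0xFFF);
}

/* Build a 32-bit Sv32 page table entry from a page-aligned physical
 * address (up to 34 bits in Sv32) and the flag bits (bit 0 = valid). */
uint32_t sv32_pa_to_pte(uint64_t pa, uint32_t flags)
{
    return (uint32_t)((pa >> PAGE_SHIFT) << 10) | (flags & 0x3FF);
}
```

For the virtual address 0x80402ABC, Sv32 yields the indices 0x201 and 0x002 with offset 0xABC, while Sv39 splits the same address into the indices 2, 2 and 2. Page table entries also shrink from 8 bytes in Sv39 to 4 bytes in Sv32, which is one reason why the 64-bit page table code cannot simply be reused.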

My small project seems to have found at least one interested person. Jim Huang has re-based my port to have proper diffs against the 64-bit xv6 port and might use it for one of his courses.

Nice to see this is useful for people on the other side of the world ;-).

Tags: RISC V, xv6, operating system